Chapter 3 The Engagement Trap and Disinformation in the United Kingdom

The framework introduced in the introductory chapter may give the impression that the United Kingdom is better placed than Hungary and the United States to withstand the threat of disinformation: it has relatively high trust in its democratic institutions, a less polarized media and public than the United States, and a much higher Liberal Democracy Index score (from Varieties of Democracy) than Hungary. In this sense, the United Kingdom is arguably the closest of the case studies to conditions in Japan, a country that also enjoys relatively high levels of trust in its institutions.

This chapter asks what kinds of disinformation remain a risk in a country, in this case the United Kingdom, that is not suffering from democratic backsliding. Even here, public concern is on the rise,[1] and the United Kingdom presents one of the most successful uses of what this chapter terms the “engagement trap”, which remains a continuing threat. The threat of disinformation is nothing new in the United Kingdom: foreign hostile actors such as Russia, China, and Iran have all been accused of attempting to interfere with its democratic process,[2] making disinformation a particular concern for the country. This chapter is divided into two sections: the first sets out some of the key examples of disinformation in the United Kingdom, and the second focuses on the response to tackling disinformation.

This chapter explores how the United Kingdom’s initial response to the use of disinformation in its democratic processes (such as elections and referendums) was slower than that of others such as the United States. This is followed by an analysis of the concept of the “engagement trap”, using the “£350 million” claim from the 2016 EU referendum as an example of a disinformation tactic that successfully employed this method. The first section also briefly touches upon the recent use of AI as a disinformation tool in the United Kingdom, while the second section explores how the government and media responded to the threat. The chapter also uses the North Atlantic Fella Organization (NAFO) and its strategy against Russian disinformation in the war in Ukraine as an example of a successful use of the engagement trap against disinformation. Findings from this chapter will feed into the policy recommendations in the final chapter of this report.

Dragging Their Feet: Initial Slow Response to the Disinformation Threat

One of the earliest examples of modern foreign disinformation activity in the United Kingdom was Russian electoral interference during the 2014 Scottish independence referendum.[3] Here, Russia tried to sow doubt over the validity of the referendum result, a tactic that would be repeated in “at least 11 elections” according to a United States intelligence report.[4] This is a rather crude example of the “engagement trap”, a disinformation tactic that relies on emotional engagement to spread. It called into question one of the key pillars of free and open elections,[5] and tried to widen divisions between the “Yes” and “No” sides by alleging that the vote itself was rigged.

Curiously, when the Intelligence and Security Committee was tasked with investigating Russian interference in the United Kingdom, it refrained from analyzing Russian activities in the 2016 EU referendum.[6] To the extent that Russian interference was acknowledged, it was limited to noting that Russian media operating in the United Kingdom, such as RT (formerly Russia Today) and Sputnik, had taken overtly pro-Leave positions in their coverage.[7] Beyond the delays in the publication of this report, observers were angered that the committee was seemingly unwilling to look into whatever impact Russian interference may have had on Brexit, a matter of considerable public interest.[8] It may have been judged less disruptive to publish the findings after the deadline for the withdrawal negotiations, but this fails to explain why the British government avoided a full investigation into the extent of Russian involvement. Whatever the reason behind the decision, it would be worthwhile for the current Labour government to open a full investigation into Russian meddling in the 2016 EU referendum.

It ultimately took the invasion of Ukraine for the United States, the United Kingdom, and the EU to clamp down on Russian disinformation by revoking the licenses of RT and Sputnik to operate in their respective jurisdictions.[9] Not even the Salisbury poisoning, an assassination attempt against former Russian double agent Sergei Skripal[10] on United Kingdom soil in 2018, prompted such a reaction.[11] The move had an immediate impact on United States tech giants such as Google, Facebook, and YouTube, which followed the government lead by banning RT from their platforms in Europe (globally in the case of Google and YouTube), effectively stifling the flow of disinformation coming directly from the Kremlin. On one level, it is encouraging to see how swiftly these companies acted, but it is also necessary to acknowledge that it took a full-scale invasion for any action to take place.

Concerns remain over the effectiveness of such censorship, as Russian propaganda continues to be broadcast in Spanish and Arabic,[12] and these outlets remain operational in Japan. These drawbacks reveal the need for a more coordinated international response to disinformation. However, this also provides a tentative model for a government-led policy response amplified by the support of the private sector. This is arguably a key example of the importance of striking “the right balance between governments and firms”,[13] in which the government successfully nudges the private sector to follow its lead. In short, the United Kingdom’s response to disinformation was initially slow and limited, but as the next section will show, the “engagement trap” continues to become more sophisticated, increasing the threat of domestic disinformation.

The Engagement Trap

Use of the engagement trap, a relatively sophisticated disinformation tactic, is arguably more reminiscent of the old-school disinformation tactics of the Cold War period, which were characterized by carefully tailored content that strategically targeted small groups.[14] Modern forms of disinformation, by contrast, place greater value on quantity.[15] The United Kingdom is no stranger to the risks of disinformation, and some of the more successful examples show how effectively what this chapter terms the “engagement trap” can be deployed. The “engagement trap” is defined here as a specific disinformation tactic that twists the truth and makes it emotionally engaging in order to sustain maximum engagement, with the aim of spreading a narrative that benefits the perpetrator. This definition draws on numerous previous studies that have examined how and why disinformation is effective.[16] The current disinformation literature finds that people are more likely to believe disinformation if it comes from a trusted source, if it confirms their existing understanding of reality, or if the content is emotionally engaging.[17] Social media in general functions on the basis of an “attention economy”,[18] where holding the user’s attention for as long as possible is the priority, which in turn encourages the creation of “moral and emotional” content in the hope of going viral.[19] People may also be drawn to disinformation as a coping mechanism when faced with insufficient information, in a desire to make sense of reality by filling the gaps in knowledge with myths and hearsay.[20] Studies on conspiracy theories related to COVID-19 also showed that believers are encouraged to conduct their own research and are referred to as “awake” readers, which nudges them into becoming more active and engaged with disinformation.[21] These studies indicate that those spreading disinformation have a strong incentive to keep the public engaged with their content. Such exploitation of psychological responses is reminiscent of the so-called “perception hack”, in which perpetrators deliberately exaggerate the extent of their influence to amplify public concern and distrust.[22]

However, the United Kingdom is a robust liberal democracy with generally healthy levels of trust in its key institutions. In this regard, the United Kingdom’s context has some similarities with, as well as glaring differences from, those of the other case studies in this report. As mentioned in the introduction, its Liberal Democracy Index score has been consistently high, in contrast to the United States and especially Hungary. At 33 per cent, its overall trust in the news is in a similar range to that of Hungary (25 per cent), the United States (32 per cent), and Japan (42 per cent).[23] However, this overall low level of trust masks the fact that brands such as the BBC enjoy high levels of trust, with 61 per cent saying they trust BBC News, which also dominates viewership.[24] Additionally, the British media in general is less polarized than in places like the United States, with a healthy balance between sources considered left-leaning and those regarded as right-leaning.[25] Trust in the government in the United Kingdom is also relatively high. According to the World Values Survey (2017–2022), when respondents were asked to rate how democratically their country was governed on a scale of 1 (lowest) to 10 (highest), the United Kingdom had a mean of 6.56, slightly higher than the global average of 6.25.[26] In short, the United Kingdom shows a healthy level of trust in the media and government, making it less likely to fall victim to disinformation.

Furthermore, disinformation does not always reach its intended audience easily. For example, a study by the Reuters Institute at the University of Oxford found that, as of 2017, just 3.5 per cent of the online public accessed disinformation websites.[27] This suggests a ceiling on the number of people such websites can reach. The greater problem therefore lies with disinformation that breaks into the mainstream: content that can overcome the barrier created by the robust liberal democratic values embodied in key institutions, and that thrives on increased engagement.

In a similar vein, disinformation is likely to be more effective when it builds on pre-existing beliefs. Arguably, there is nobody better to turn to in understanding disinformation than those who create it. As Ladislav Bittman, a former Czechoslovak intelligence officer, noted, disinformation needs to “at least partially respond to reality, or at least accepted views”.[28] An example of this is the claim, emblazoned on the side of a campaign bus, that the £350 million per week the United Kingdom sent to the EU should instead be spent on the NHS. This was one of the more successful campaigns by Vote Leave during the 2016 EU referendum. The claim enraged the Remain side, prompting some to call it a Brexit lie.[29] Fact checking websites such as Full Fact concluded that, according to official data, the amount was closer to “£250 million”.[30] The Remain campaign, keen to fight the Leave side on similar ground, argued that Brexit would cost each British household “£4,300”.[31] However, while the Remain side’s claim faced ridicule and was labeled “project fear”,[32] the “£350 million” claim stuck. Two years after the referendum, despite the highly misleading figure, many people still believed the claim to be true.[33]

However, this presents an interesting puzzle. Why was the Remain side’s argument so easily brushed aside, while the Leave side’s claim was widely accepted? The key to understanding the success of the Leave campaign lies in what this chapter terms the “engagement trap”, a form of disinformation that thrives on engagement from its opponents. Just as there is supposedly no such thing as bad publicity, engagement with disinformation does not have to be positive to be useful. In the words of Dominic Cummings, campaign director of Vote Leave, the “£350 million” claim was intended as “a deliberate trap” to drive the Remain campaign and the people running it “crazy”.[34] Since the figure drew on official sources, the Remain side was forced to try to explain the complicated rebate system,[35] something that does not work well in a political campaign, which often relies on catchy soundbites.[36] The more the Remain side argued back, the more it emphasized in the public mind what Cummings called the “real balance sheet” of EU membership,[37] becoming further entangled in the engagement trap.

A New Level of Threat to Democracy

Since the 2016 EU referendum, disinformation has become more sophisticated, with a greater arsenal of readily available technology to help spread it, and the “engagement trap” has evolved accordingly. Concerns over the threat of disinformation were already mounting in the run-up to the July 2024 general election.[38] Even before the election was called, there were early indications of how new AI technology could be used. Coinciding with the start of the Labour Party Conference on October 8, 2023, falsified audio of the Labour Party leader, Sir Keir Starmer, apparently berating a staff member over a tablet was uploaded on X (formerly Twitter).[39] Roughly a month later, another falsified audio clip, this time of London Mayor Sadiq Khan, was uploaded on TikTok.[40] In the clip, Khan appears to downplay Armistice Day (a day of remembrance of the sacrifices made for the war effort and a call for peace) while heaping praise on pro-Palestinian protests.

The use of AI to manipulate audio and video of politicians is not unique to the United Kingdom. In Japan, for example, a video of former Prime Minister Fumio Kishida making “vulgar statements” was made with the help of AI.[41] The video was taken down by its creator, who admitted that it was an ill-judged attempt at humor.[42] While this incident shows how easy and accessible such AI technology has become, the Starmer and Khan examples differ in that they were created with a clear intent to use disinformation to create division within society by drawing the public into another engagement trap.

The timing of the two audio clips, one at the start of the annual Labour Party Conference and the other before Armistice Day, points to clear political motivation behind their release. In particular, the Khan clip was released in the lead-up to a particularly sensitive day for the United Kingdom. Armistice Day, or Remembrance Day, arguably holds a place of importance and reverence similar to that of the Hiroshima Peace Memorial Day and Nagasaki Memorial Day in Japan. Far-right protesters, including Stephen Yaxley-Lennon (known as Tommy Robinson), who had led the now defunct far-right group the English Defence League, were energized to turn up as counter-protesters on the day, which saw nine police officers injured and more than a hundred arrests made.[43] While it is unclear to what degree the audio clip contributed to this, the emergence of both clips suggests that such disinformation tactics are here to stay.

Combating Disinformation

In general, there are three types of actors that are considered essential when it comes to tackling disinformation, namely the public sector (including the government and educational institutions), the private sector (large technology firms and the media), and the public.[44] This section explores some of the ways in which the United Kingdom government and media have attempted to address the threat of disinformation. The section will also explore how online grassroots organizations such as NAFO, with members in the United Kingdom and around the globe, have been successful at overcoming the engagement trap.

United Kingdom Policy Response

As previously noted, the United Kingdom was initially slow to react to the threat of disinformation, as exemplified by the delay in banning state-backed outlets such as RT and Sputnik and an unwillingness to investigate Russian influence in the 2016 EU referendum. In contrast, the United States acted quickly against Russian media after the 2016 United States Presidential election. In January 2017, a report on Russian interference in that election was declassified; it found that media outlets such as RT had actively peddled pro-Trump and anti-Clinton disinformation in the United States,[45] which resulted in RT being registered as a “foreign agent” and set the tone of the United States’ response to Russian interference in its domestic affairs.[46] In short, the United Kingdom’s response was slower and less far-reaching than that of the United States.

This has arguably changed in recent years. At the government level, the United Kingdom has been ahead of the curve in introducing legislation aimed at tackling disinformation, and in the process it has taken a central role in leading an international response to the threat. The Online Safety Act 2023, which received Royal Assent on October 26, 2023, appointed Ofcom as the main regulator of illegal online content such as child sexual abuse material, as well as content more broadly deemed harmful to children. One of the Act’s breakthroughs was to give the regulation of online activity some teeth, exposing companies found to have failed to comply to fines of up to £18 million or 10 per cent of their worldwide revenue, whichever is greater.[47] The Act has some drawbacks: it focuses on regulating illegal content, while its ability to regulate gray zones such as misinformation and disinformation remains relatively weak.[48] Questions also remain over how far the private sector can comply with requirements such as age verification and the checking of private messages,[49] and the age-old question of freedom of expression remains a concern.[50] Despite such limitations, the United Kingdom is following in the footsteps of the EU’s Digital Services Act (which came into effect on August 25, 2023)[51] and its Code of Practice on Disinformation (updated in 2022)[52] in trying to hold companies accountable for harmful content online and mitigate risks to the public, an approach that Japan would do well to follow.

The United Kingdom also hosted the first AI Safety Summit on November 1–2, 2023, bringing together 46 universities and civil society groups, 40 businesses, 28 states, and seven multilateral organizations to address the threats posed by AI.[53] The summit provided an opportunity for stakeholder discussions and put pressure on companies to submit their AI policies for greater transparency.[54] While the summit’s main focus was not disinformation, it signals a growing willingness to cooperate internationally in tackling frontier technology that could be used for disinformation purposes. Taken together, the Online Safety Act 2023 and the AI Safety Summit 2023 mark a new phase in the fight against disinformation. An obvious route for Japan would be to further accelerate the push towards international cooperation in this field and take on a leading role in shaping the international response to the threat of disinformation.

Not all of the United Kingdom’s measures are as global or far-reaching as the AI Safety Summit or the Online Safety Act. In terms of combating disinformation through education, the Department for Digital, Culture, Media & Sport launched the Online Media Literacy Strategy in 2021, with an initial budget of £340,000 for training educators, carers, and librarians in media literacy.[55] In essence, this acted as a stop-gap measure[56] while the government prepared larger-scale regulatory measures. Although the strategy brings in the right stakeholders, who deal directly with some of the most vulnerable groups in society (i.e., carers and guardians of the elderly, children, and disabled people), its budget is considerably smaller than the task at hand requires. The challenge for the United Kingdom is thus to ensure that such policies are backed by sufficient funds.

In the case of Japan, in 2022 the Ministry of Internal Affairs and Communications launched a website with educational materials aimed at the elderly, young people, and their carers to improve online media literacy.[57] Similarly, the Center for Global Communications at the International University of Japan (GLOCOM) developed educational materials in collaboration with the private sector.[58] However, the Japanese public is in general less aware of, and less concerned with, the threat posed by disinformation than the public in other countries: 53.6 per cent of Japanese respondents had never heard of “fact checking”, compared with just 4.8 per cent of United States respondents and 3.4 per cent of Korean respondents.[59] Japan thus needs to take seriously the need to raise public awareness of the threat of disinformation and to help people become familiar with the countermeasures in place, such as fact checking services.

Media and Fact Checks

The previous chapter on Hungary illustrated in detail how a lack of independent media can allow disinformation to be spread from the government level. In contrast, news organizations in the United Kingdom are at the forefront of tackling disinformation, providing solutions rather than being the source of the problem. Since those most susceptible to disinformation such as conspiracy theories are encouraged to conduct their own research,[60] one possible counter would be to make it easier for people to access accurate information. The United Kingdom has already taken tentative steps in this direction through the Link Attribution Protocol introduced by the Association of Online Publishers (AOP).[61] The protocol encourages major media outlets to provide fair attribution to original source material. Although it was originally developed to address scoop theft, and its scope is somewhat limited because participation is voluntary and the attribution links only run between media outlets, it nonetheless presents a clear pathway towards more transparent use of sources. If implemented effectively, it could also make it easier for readers to access accurate information.

Established public institutions such as the BBC, which enjoys a prominent position on both traditional and internet-enabled TV alongside other public media,[62] can also achieve a truly global reach. Through the BBC World Service, which broadcasts news in 42 languages, the BBC can support not only the United Kingdom but also other countries in tackling disinformation.[63] The BBC’s main fact checking service is BBC Verify (formerly Reality Check). One key feature of BBC Verify is that it allows viewers to suggest topics they want the BBC to check. By making the service partly demand-driven, BBC Verify can help ensure that the topics it verifies are of public interest. At the same time, this reduces the burden on journalists of finding topics themselves (a known problem),[64] making the process more efficient.

Yet the BBC is also far from perfect. Its impartiality requirements oblige it to “ensure a wide range of significant views and perspectives are given due weight and prominence, particularly when the controversy is active”.[65] This can sometimes lead to unintentionally giving equal prominence to viewpoints that are not equally valid. Such a narrow interpretation of impartiality has been criticized for prioritizing balance in airtime over the substance of the information,[66] giving undue prominence to more niche views and, in some cases, providing room for disinformation to spread.

However, arguably one of the greatest issues that fact checking services face is the “engagement trap”. As the “£350 million” claim during the 2016 EU referendum showed, some disinformation is designed to be most effective when it is dissected and remains part of public discussion, so that coverage of the claim, including fact checks, can end up amplifying it. Fact checkers have yet to come up with a clear strategy for disinformation campaigns that actively seek engagement. Arguably, the responses that have been most effective against the engagement trap are those that have successfully used the same tactic.

The Engagement Trap as an Anti-disinformation Tool?

The “£350 million” claim illustrated how the engagement trap can be used as a key disinformation tool. However, the same tactic can also be used to combat disinformation. Arguably, no organization has mastered this better than NAFO, an online community that fights Russian disinformation with memes as its main weapon. NAFO was co-created by Matt Moores in May 2022, at first “as a joke” to poke fun at Russian statements.[67]

Moores listed some of NAFO’s key strengths as its organic development, effective use of internet culture, and the use of ideas as its unifier.[68] The lack of strategic planning means that NAFO is flexible and able to adapt to a rapidly changing environment. Since the posts rely on preexisting internet culture,[69] members can easily pick up and mimic the style of NAFO posts without much guidance. This is why, despite the relatively few posts made by the main NAFO account,[70] its reach remains far and wide thanks to fellow “fellas”, who use Shiba Inu dogs as their avatars and help by posting memes and tip-offs when they spot a pro-Russian post to ridicule. This is achieved through widely shared hashtags such as #NAFOArticle5 (a reference to NATO’s Article 5 principle of collective defense).[71] NAFO is not the first to use internet culture tactically for political purposes. In 2020, fans of the K-pop group BTS made headlines when they succeeded in drowning out the #WhiteLivesMatter hashtag, which emerged in response to the #BlackLivesMatter movement.[72] Such hashtag hijacking is a distinctive social media strategy that works within the internet culture of X, which feeds on engagement.

However, unlike the K-pop fandom, what unites NAFO is not a group but a shared idea.[73] Without a physical base or organizational structure, there is no clear target for Russia to attack or infiltrate, making NAFO immune to some of the usual infiltration tactics that have dogged academia,[74] politics,[75] and local communities.[76] When Russians do try to infiltrate, NAFO members are quick to warn others, effectively self-policing their activities without the need for external intervention. Such flexibility is unlikely to be replicated by government agencies, the media, or dedicated fact checking services. In short, in the right hands the engagement trap can be harnessed as a key tool against disinformation. It remains to be seen whether movements similar to NAFO will appear in the United Kingdom or Japan, but as liberal democracies, both have the right ingredients for such organizations to flourish.

Conclusion

This chapter explored how disinformation has evolved in the United Kingdom, a country that has hitherto managed to contain the threat. In contrast to the previous two chapters, the threat of disinformation here manifests within a relatively robust set of democratic institutions. Like Japan, the United Kingdom has higher levels of trust in the government and the media, and a more balanced media landscape, than the previous two case studies.

However, the chapter has shown that the United Kingdom continues to face an evolving threat from disinformation, from relatively crude initial attempts using social media bots to sow divisions during the 2014 Scottish referendum, to a more sophisticated use of the “engagement trap” during the 2016 EU referendum, and finally the targeted use of AI to spread disinformation at politically sensitive times. Despite its seemingly slow and reluctant initial response to the threat, the United Kingdom government has been ahead of the curve in tackling disinformation through international cooperation and tougher regulation. The media and fact checking services in the United Kingdom have made headway in debunking some of the disinformation spread online, but they have been unable to deal adequately with the “engagement trap”. This chapter has argued that grassroots movements such as NAFO are a prime example of how the “engagement trap” can be used to discredit disinformation. The United Kingdom currently enjoys relatively robust liberal democratic institutions that can insulate it from disinformation, yet it cannot remain complacent in the face of an ever more sophisticated threat from both internal and external forces. The United Kingdom’s case shows that, despite the challenges, tools to combat disinformation already exist. For countries such as Japan, actively enlisting the help of grassroots communities may be an effective way to manage the threat posed by disinformation.

Footnotes

- [1] Mark McGeoghegan, “Reputation resilience in the age of ‘fake news’,” Ipsos, August 7, 2019, https://www.ipsos.com/en-uk/reputation-resilience-age-fake-news.

- [2] Parliamentary Debates, House of Commons, May 22, 2023, vol. 733, col. 16 (Christian Wakeford, Labour MP).

- [3] Intelligence and Security Committee of Parliament, Russia (London: House of Commons, July 21, 2020), 13, https://isc.independent.gov.uk/wp-content/uploads/2021/03/CCS207_CCS0221966010-001_Russia-Report-v02-Web_Accessible.pdf.

- [4] AFP in Washington, “Russia working to undermine trust in elections globally, US intelligence says,” The Guardian, October 20, 2023, https://www.theguardian.com/world/2023/oct/20/russia-spy-network-elections-democracy-us-intelligence.

- [5] Steven Levitsky and Lucan A. Way, “Elections Without Democracy: The Rise of Competitive Authoritarianism,” Journal of Democracy 13, no. 2 (2002): 51–65, https://www.journalofdemocracy.org/articles/elections-without-democracy-the-rise-of-competitive-authoritarianism/.

- [6] Intelligence and Security Committee of Parliament, Russia.

- [7] Ibid.

- [8] Donatienne Ruy, “Did Russia Influence Brexit?” Center for Strategic and International Studies, July 21, 2020, https://www.csis.org/blogs/brexit-bits-bobs-and-blogs/did-russia-influence-brexit; Nick Witney, “A country under the influence: Russian interference in British politics,” European Council on Foreign Relations, July 23, 2020, https://ecfr.eu/article/commentary_a_country_under_the_influence_russian_interference_in_british_po/.

- [9] BBC News, “RT: Russian-backed TV news channel disappears from UK screens,” BBC News, March 3, 2022, https://www.bbc.com/news/entertainment-arts-60584092; Gerrit De Vynck, “E.U. sanctions demand Google block Russian state media from search results,” The Washington Post, March 9, 2022, https://www.washingtonpost.com/technology/2022/03/09/eu-google-sanctions/.

- [10] Marie Lennon, “Salisbury Novichok poisoning medics ‘still traumatised’,” BBC News, February 23, 2023, https://www.bbc.com/news/uk-england-wiltshire-64742249.

- [11] Gordon Corera, “Salisbury poisoning: What did the attack mean for the UK and Russia?” BBC News, March 04, 2020, https://www.bbc.com/news/uk-51722301.

- [12] Elizabeth Dwoskin, Jeremy B. Merrill, and Gerrit De Vynck, “Social platforms’ bans muffle Russian state media propaganda,” The Washington Post, March 16, 2022, https://www.washingtonpost.com/technology/2022/03/16/facebook-youtube-russian-bans/.

For further information on disinformation from RT and Sputnik, please refer to the following two reports, which provide detailed evidence: Gordon Ramsay and Sam Robertshaw, Weaponising news: RT, Sputnik and targeted disinformation (London: King’s College London, the Policy Institute Centre for the Study of Media, Communication & Power, 2019), https://www.kcl.ac.uk/policy-institute/assets/weaponising-news.pdf; U.S. Department of State Global Engagement Center, Kremlin-Funded Media: RT and Sputnik’s role in Russia’s disinformation and propaganda ecosystem (Washington D.C.: U.S. Department of State, 2022), https://www.state.gov/wp-content/uploads/2022/01/Kremlin-Funded-Media_January_update-19.pdf.

- [13] Paul Nadeau, IOG Economic Intelligence Report Vol. 3 No. 2 (Tokyo: Institute of Geoeconomics, January 22, 2023), 3, https://apinitiative.org/GaIeyudaTuFo/wp-content/uploads/2024/01/IOG-Economic-Intelligence-Report-Late-January.pdf.

- [14] Thomas Rid, Active Measures: The Secret History of Disinformation and Political Warfare (New York: Picador, 2020).

- [15] Christopher Paul and Miriam Matthews, The Russian “Firehose of Falsehood” Propaganda Model: Why It Might Work and Options to Counter It (Santa Monica: RAND Corporation, 2016), https://www.rand.org/pubs/perspectives/PE198.html.

- [16] Ullrich K. H. Ecker et al., “The psychological drivers of misinformation belief and its resistance to correction,” Nature Reviews Psychology 1 (2022): 13-29, https://doi.org/10.1038/s44159-021-00006-y.

- [17] Ibid.

- [18] James Williams, Stand out of our light: Freedom and resistance in the attentional economy (New York: Cambridge University Press, 2018).

- [19] William J. Brady, Ana P. Gantman, and Jay Joseph Van Bavel, “Attentional capture helps explain why moral and emotional content go viral,” Journal of Experimental Psychology: General 149, no. 4 (April 2020): 746–756, 754, https://doi.org/10.1037/xge0000673.

- [20] P. M. Krafft and Joan Donovan, “Disinformation by design: The Use of Evidence Collages and Platform Filtering in a Media Manipulation Campaign,” Political Communication 37, no. 2 (2020): 194–214, https://doi.org/10.1080/10584609.2019.1686094.

- [21] Rod Dacombe, Nicole Souter, and Lumi Westerlund, “Research note: Understanding offline Covid-19 conspiracy theories: A content analysis of The Light ‘truthpaper’,” Harvard Kennedy School Misinformation Review 2, no. 5 (September 2021): 1–11, https://doi.org/10.37016/mr-2020-80.

- [22] Nathaniel Gleicher, “Removing Coordinated Inauthentic Behavior,” Meta, October 27, 2020, https://about.fb.com/news/2020/10/removing-coordinated-inauthentic-behavior-mexico-iran-myanmar/. Research has found that media trust leads to greater engagement with media output, which may in turn lead to greater media literacy. For research on this topic, please refer to the following articles: Ann E. Williams, “Trust or Bust?: Questioning the Relationship Between Media Trust and News Attention,” Journal of Broadcasting & Electronic Media 56, no. 1 (2012): 116–131, https://doi.org/10.1080/08838151.2011.651186; Jason Turcotte, Chance York, Jacob Irving, Rosanne M. Scholl, and Raymond J. Pingree, “News Recommendations from Social Media Opinion Leaders: Effects on Media Trust and Information Seeking,” Journal of Computer-Mediated Communication 20, no. 5 (September 2015): 520–535, https://doi.org/10.1111/jcc4.12127. Media trust has also been found to improve media consumption, as shown in this study: Jesper Strömbäck, Yariv Tsfati, Hajo Boomgaarden, Alyt Damstra, Elina Lindgren, Rens Vliegenthart, and Torun Lindholm, “News Media Trust and Its Impact on Media Use: Toward a Framework for Future Research,” Annals of the International Communication Association 44, no. 2 (2020): 139–156, https://doi.org/10.1080/23808985.2020.1755338. Research has also found a causal relationship between distrust in the media and belief in fake news (and vice versa): Sacha Altay, Benjamin A. Lyons, and Ariana Modirrousta-Galian, “Exposure to Higher Rates of False News Erodes Media Trust and Fuels Overconfidence,” Mass Communication and Society (August 2024): 1–25, https://doi.org/10.1080/15205436.2024.2382776; Katherine Ognyanova, David M. J. Lazer, Ronald E. Robertson, and Christo Wilson, “Misinformation in action: Fake news exposure is linked to lower trust in media, higher trust in government when your side is in power,” Harvard Kennedy School Misinformation Review 1, no. 4 (2020): 1–19, https://doi.org/10.37016/mr-2020-024.

- [23] Richard Fletcher, Alessio Cornia, Lucas Graves, and Rasmus Kleis Nielsen, Measuring the reach of “fake news” and online disinformation in Europe (Oxford: Reuters Institute, 2018), 59, 81, 109 & 135, https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2018-02/Measuring%20the%20reach%20of%20fake%20news%20and%20online%20distribution%20in%20Europe%20CORRECT%20FLAG.pdf.

- [24] Ibid., 59.

- [25] Nic Newman and Richard Fletcher, Digital News Project 2017 Bias, Bullshit and Lies: Audience Perspectives on Low Trust in the Media (Oxford: Reuters Institute, 2017), 20, https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2017-11/Nic%20Newman%20and%20Richard%20Fletcher%20-%20Bias%2C%20Bullshit%20and%20Lies%20-%20Report.pdf.

- [26] Christian Haerpfer et al. eds., World Values Survey: Round Seven – Country-Pooled Datafile Version 6.0. (Madrid, Spain & Vienna, Austria: JD Systems Institute & WVSA Secretariat, 2022), https://doi.org/10.14281/18241.20.

- [27] Richard Fletcher, Alessio Cornia, Lucas Graves, and Rasmus Kleis Nielsen, Measuring the reach of “fake news” and online disinformation in Europe (Oxford: Reuters Institute, 2018), 1, https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2018-02/Measuring%20the%20reach%20of%20fake%20news%20and%20online%20distribution%20in%20Europe%20CORRECT%20FLAG.pdf.

- [28] Rid, Active Measures, 5.

- [29] Toby Helm, “Brexit camp abandons £350m-a-week NHS funding pledge,” The Guardian, September 10, 2016, https://www.theguardian.com/politics/2016/sep/10/brexit-camp-abandons-350-million-pound-nhs-pledge.

- [30] “£350 million EU claim ‘a clear misuse of official statistics’,” Full Fact, last modified September 19, 2017, https://fullfact.org/europe/350-million-week-boris-johnson-statistics-authority-misuse/.

- [31] Larry Elliott, “Will each UK household be £4,300 worse off if the UK leaves the EU?” The Guardian, April 18, 2016, https://www.theguardian.com/politics/2016/apr/18/eu-referendum-reality-check-uk-households-worse-off-brexit.

- [32] Annabelle Dickson, “Remainer Brexit scares and reality,” Politico, October 10, 2017, https://www.politico.eu/article/accurate-prophecy-or-misleading-project-fear-how-referendum-claims-have-panned-out-so-far/.

- [33] The Policy Institute, Brexit misperceptions (London: The Policy Institute, King’s College London, 2018), 9, https://www.kcl.ac.uk/policy-institute/assets/brexit-misperceptions.pdf.

- [34] BBC, “Dominic Cummings – The Interview – BBC News Special – 20th July 2021,” filmed July 20, 2021 at BBC, London, video, 25:01 & 25:27, https://youtu.be/ddHN2qmvCG0?feature=shared.

- [35] Ibid., 26:22.

- [36] Eike Mark Rinke, “The Impact of Sound-Bite Journalism on Public Argument,” Journal of Communication 66 (2016): 625-645, 626, https://doi.org/10.1111/jcom.12246.

- [37] BBC, “Dominic Cummings,” 25:21.

- [38] Michael Savage, “Call for action on deepfakes as fears grow among MPs over election threat,” The Guardian, January 21, 2024, https://www.theguardian.com/politics/2024/jan/21/call-for-action-on-deepfakes-as-fears-grow-among-mps-over-election-threat.

- [39] Sky News, “Deepfake audio of Sir Keir Starmer released on first day of Labour conference,” Sky News, October 09, 2023, video, https://news.sky.com/story/labour-faces-political-attack-after-deepfake-audio-is-posted-of-sir-keir-starmer-12980181.

- [40] Dan Sabbagh, “Faked audio of Sadiq Khan dismissing Armistice Day shared among far-right groups,” The Guardian, November 10, 2023, https://www.theguardian.com/politics/2023/nov/10/faked-audio-sadiq-khan-armistice-day-shared-among-far-right.

- [41] The Yomiuri Shimbun, “Fake Video of Japan Prime Minister Kishida Triggers Fears of AI Being Used to Spread Misinformation,” The Yomiuri Shimbun, November 04, 2023, https://japannews.yomiuri.co.jp/politics/politics-government/20231104-147695/.

- [42] The Asahi Shimbun, “Fake video of Kishida spreads; creator denies malicious intent,” The Asahi Shimbun, November 05, 2023, https://www.asahi.com/ajw/articles/15048963.

- [43] Thomas Mackintosh and Emily Atkinson, “London protests: Met condemns ‘extreme violence’ of far-right,” BBC News, November 12, 2023, https://www.bbc.com/news/uk-67390514.

- [44] Darrell M. West, “How to combat fake news and disinformation,” Brookings, December 18, 2017, https://www.brookings.edu/articles/how-to-combat-fake-news-and-disinformation/.

- [45] Intelligence Community Assessment, Assessing Russian Activities and Intentions in Recent US Elections: The Analytic Process and Cyber Incident Attribution (Washington D.C.: Office of the Director of National Intelligence, January 06, 2017), https://www.dni.gov/files/documents/ICA_2017_01.pdf.

- [46] Jack Stubbs and Ginger Gibson, “Russia’s RT America registered as ‘foreign agent’ in U.S.,” Reuters, November 14, 2017, https://www.reuters.com/article/idUSKBN1DD29L/.

- [47] Online Safety Act 2023, 2023, chap.6.

- [48] “The Online Safety Act and Misinformation: What you need to know,” Full Fact, accessed February 02, 2024, https://fullfact.org/about/policy/online-safety-bill/.

- [49] Peter Guest, “The UK’s Controversial Online Safety Act Is Now Law,” Wired, October 26, 2023, https://www.wired.co.uk/article/the-uks-controversial-online-safety-act-is-now-law.

- [50] Sarah Dawood, “Will the Online Safety Act protect us or infringe our freedoms?” The New Statesman, November 17, 2023, https://www.newstatesman.com/spotlight/tech-regulation/online-safety/2023/11/online-safety-act-law-bill-internet-regulation-free-speech-children-safe.

- [51] “The Digital Services Act,” European Commission, accessed March 05, 2024, https://commission.europa.eu/strategy-and-policy/priorities-2019-2024/europe-fit-digital-age/digital-services-act_en#:~:text=Digital%20Services%20Act%20(DSA)%20overview,and%20the%20spread%20of%20disinformation.

- [52] “A strengthened EU Code of Practice on Disinformation,” European Commission, accessed February 02, 2024, https://commission.europa.eu/strategy-and-policy/priorities-2019-2024/new-push-european-democracy/protecting-democracy/strengthened-eu-code-practice-disinformation_en#chronology-of-eu-actions-against-disinformation.

- [53] “Guidance: AI Safety Summit: confirmed attendees (governments and organisations),” Department for Science, Innovation & Technology, last modified October 31, 2023, https://www.gov.uk/government/publications/ai-safety-summit-introduction/ai-safety-summit-confirmed-governments-and-organisations.

- [54] “Policy Updates,” UK Government, accessed February 02, 2024, https://www.aisafetysummit.gov.uk/policy-updates/#company-policies.

- [55] “News story: Minister launches new strategy to fight online disinformation,” GOV.UK, last modified July 14, 2021, https://www.gov.uk/government/news/minister-launches-new-strategy-to-fight-online-disinformation.

- [56] H. Andrew Schwartz, “#NAFO Co-Founder Matt Moores on Combatting Russian Disinformation,” The Truth of the Matter (podcast), October 13, 2022, accessed February 01, 2024, https://www.csis.org/podcasts/truth-matter/nafo-co-founder-matt-moores-combatting-russian-disinformation.

- [57] “【啓発教育教材】インターネットとの向き合い方~ニセ・誤情報に騙されないために~ [Educational Material, How to work with the Internet and how to not fall for mis- and disinformation],” Ministry of Internal Affairs and Communications, accessed March 05, 2024, https://www.soumu.go.jp/use_the_internet_wisely/special/nisegojouhou.

- [58] Ministry of Internal Affairs and Communications, “ネット&SNS よりよくつかって未来をつくろうICT活用リテラシー向上プロジェクトについて [Create a future with better use of the internet & SNS: On improving media literacy through the use of ICT],” Ministry of Internal Affairs and Communications, accessed March 05, 2024, https://www.ict-mirai.jp/about/.

- [59] Ministry of Internal Affairs and Communications, 令和5年「情報通信に関する現状報告」(令和5年版情報通信白書): 新時代に求められる強靱・健全なデータ流通社会の実現に向けて [2023 “Current Report on Information Communication” (2023 Information Communication White Paper): Towards realizing a strong and healthy data society of the new era] (Tokyo: Ministry of Internal Affairs and Communications, July 2023), https://www.soumu.go.jp/johotsusintokei/whitepaper/ja/r05/summary/summary01.pdf.

- [60] Dacombe et al., “Research note”.

- [61] “AOP Link Attribution Protocol; Summary of Principles,” Advocate for Quality Original Digital Content, accessed June 07, 2024, https://www.ukaop.org/hub/initiatives/aop-link-attribution-protocol-summary-of-principles.

- [62] Ofcom, “Review of prominence for public service broadcasting,” Ofcom, July 25, 2018, https://www.ofcom.org.uk/consultations-and-statements/category-1/epg-code-prominence-regime. Discussions are ongoing over introducing this in Japan (Foundation for MultiMedia Communications, “諸外国における放送インフラやネット配信の先進事例について [On online and broadcasting infrastructure cases in other countries],” Ministry of Internal Affairs and Communications, December 15, 2021, https://www.soumu.go.jp/main_content/000782843.pdf.).

- [63] UK Parliament, BBC – written evidence (DAD0062) (London: UK Parliament, no date), https://committees.parliament.uk/writtenevidence/429/pdf/.

- [64] NHK, “国内メディアによる「ファクトチェック」②(テレビ)【研究員の視点】#519 [‘Fact Checks’ by domestic media, no. 2 (TV): from the perspective of researchers #519],” Bunken Blog, December 22, 2023, https://www.nhk.or.jp/bunken-blog/500/490452.html.

- [65] “Section 4: Impartiality – Guidelines,” BBC, accessed March 05, 2024, https://www.bbc.com/editorialguidelines/guidelines/impartiality/guidelines.

- [66] Stephen Cushion, “Impartiality, statistical tit-for-tats and the construction of balance: UK television news reporting of the 2016 EU referendum campaign,” European Journal of Communication 32, no. 3 (2017): 208–223, https://doi.org/10.1177/0267323117695736.

- [67] Schwartz, “#NAFO”.

- [68] Ibid.

- [69] Ibid.

- [70] Eva Johais, “Memetic warfare in the Russo-Ukrainian conflict: Fieldnotes from digital ethnography of #NAFO,” in WARFUN diaries volume 2, ed. Antonio De Lauri (Bergen: Chr. Michelsen Institute, 2023), 35-38, https://research.fak.dk/view/pdfCoverPage?instCode=45FBI_INST&filePid=1347401180003741&download=true#page=36.

- [71] “Collective defence and Article 5,” North Atlantic Treaty Organization, last modified July 04, 2023, https://www.nato.int/cps/en/natohq/topics_110496.htm.

- [72] BBC News, “K-pop fans drown out #WhiteLivesMatter hashtag,” BBC News, June 4, 2020, https://www.bbc.com/news/technology-52922035.

- [73] Schwartz, “#NAFO”.

- [74] Emily Dixon, “Russian academic quits Estonian university after espionage arrest,” Times Higher Education, January 17, 2024, https://www.timeshighereducation.com/news/russian-academic-quits-estonian-university-after-espionage-arrest.

- [75] Naomi O’Leary, “Latvian MEP linked to Wallace and Daly accused of working with Russian intelligence,” The Irish Times, January 29, 2024, https://www.irishtimes.com/politics/2024/01/29/latvian-mep-linked-to-wallace-and-daly-accused-of-working-with-russian-intelligence/.

- [76] Daniel De Simone, “Five alleged Russian spies appear in London court,” BBC News, September 26, 2023, https://www.bbc.com/news/uk-66923824.

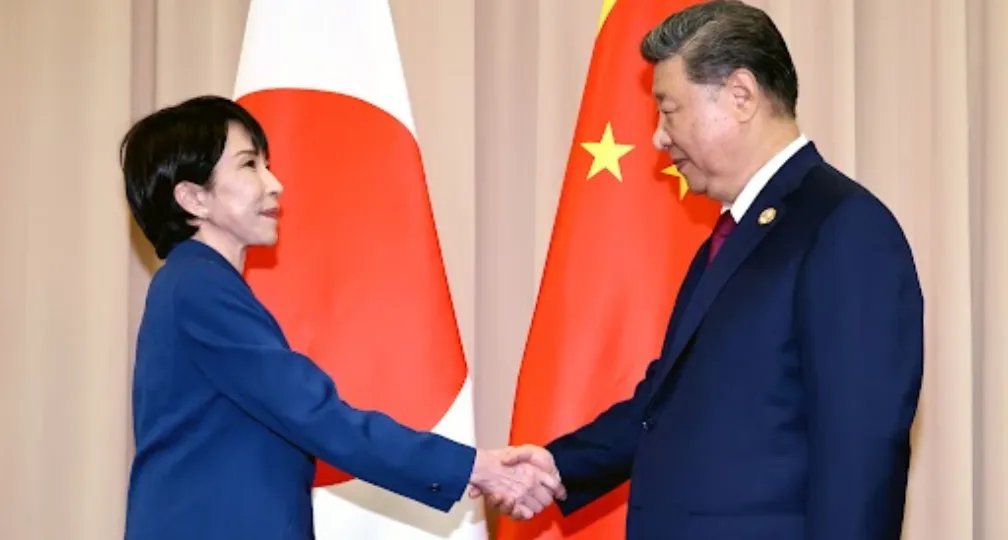

(Photo Credit: Shutterstock/Aflo)

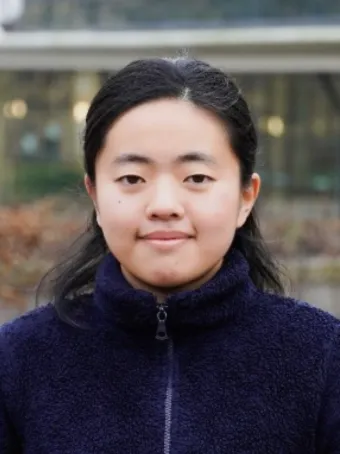

About the Author

Dr Sara Kaizuka FHEA (Part-time Lecturer at Musashino University / Part-time Assistant Lecturer at Kanagawa University / Intern at the IOG)

After receiving her BA in International Liberal Studies from Waseda University, she completed an MA in Politics at the University of Leeds, from which she also received her PhD in Politics. At the University of Leeds, she taught International Politics, Comparative Politics, and British Politics. At present, she teaches at Musashino University and works as a part-time assistant lecturer at Kanagawa University.

A dangerous confluence: The intertwined crises of disinformation and democracies: Contents

Introduction

The Definition and Purpose of Disinformation / Democratic Backsliding / Why Should We Care About Disinformation in an Era of Democratic Crisis? / Three Risks of the Spread of Disinformation and Democratic Backsliding / Report Structure

Chapter 1 Hungary: Media Control and Disinformation

Democratic Backsliding: Increased Control over Information Sources Through Media Acquisitions / Disinformation Through the Media and Its Impact: The Refugee Crisis / The Russia-Ukraine War: The Import and Export of Disinformation / The Negative Impact of Disinformation

Chapter 2 Disinformation in the United States: When Distrust Trumps Facts

The Early Years of American Disinformation / The American Context: Distrust, Past and Present / The Challenge for Newsrooms / Managing Disinformation Through the Spreader & Consumer

Chapter 3 The Engagement Trap and Disinformation in the United Kingdom

Dragging Their Feet: Initial Slow Response to the Disinformation Threat / The Engagement Trap / A New Level of Threat to Democracy / Combatting Disinformation

Conclusion: Disinformation in Japan and How to Deal with It

Disinformation During Elections and Natural Disasters in Japan / Disinformation Policies During Crises: The 2018 Okinawa Gubernatorial Election as Case Study / Disinformation During Crises: The Noto Peninsula Earthquake and Typhoon Jebi / Policy Recommendations

Disclaimer: Please note that the contents and opinions expressed in this report are the personal views of the authors and do not necessarily represent the official views of the International House of Japan or the Institute of Geoeconomics (IOG), to which the authors belong. Unauthorized reproduction or reprinting of the article is prohibited.
