How democratic states are regulating digital platforms

Digital platforms, including search engines, social media and messaging apps, are indispensable infrastructure for today’s democracy. Exchanges on these digital platforms play a crucial role in setting agendas and forming consensus within society.

At the same time, however, digital platforms have been flooded with disinformation, which has allowed foreign countries to conduct influence operations and has widened social divides. This situation could threaten the very foundation of democracy.

Although digital platforms function as critical infrastructure, most countries do not subject them to the strict oversight applied to the power industry or the financial sector. As a result, solving critical challenges regarding digital platforms is left to the platform operators.

Democratic states have recently been making efforts to regulate tech firms in order to address these problems. This article will present a blueprint and key points that need to be addressed.

Governance of digital platforms

Concerns have often been raised regarding tech companies’ internal control and corporate governance. This applies not only to unlisted companies such as SpaceX, at which one person serves as CEO and the largest shareholder, but also to publicly listed companies.

In the immediate aftermath of Russia’s invasion of Ukraine in February 2022, Meta Platforms temporarily permitted users to make posts that would normally violate its rules on violent speech, including those calling for Russian President Vladimir Putin’s death.

The firm reportedly took the step in the belief that banning such posts during wartime would hamper the unity and resistance of the Ukrainian people against Russia. However, it later moved its policy back closer to its original form after facing controversy both within and outside the company.

During the 2016 U.S. presidential election, digital platforms offered a stage for multiple large-scale interference operations by Russia, and in the 2020 U.S. presidential election, the platforms became a hotbed for conspiracy theories, including claims of “election fraud.”

Such conspiracy discourse led thousands of supporters of then-U.S. President Donald Trump to storm the U.S. Capitol building in January 2021. Many Big Tech firms, including Twitter (now X), suspended Trump’s accounts on their platforms as his posts were believed to have incited the riots.

Media platforms banning an incumbent U.S. president has major implications. Even then-German Chancellor Angela Merkel, who had clashed with Trump over the years, regarded the companies’ move as problematic.

Digital platform operators have not been able to ensure transparency and predictability in decision-making that can significantly affect democracy. Recognizing this, governments are working to regulate digital platforms.

Regulations in Europe

Ahead of other democracies, the European Union enacted two strict and comprehensive pieces of legislation to regulate digital platforms.

The Digital Services Act (DSA) and the Digital Markets Act (DMA) became applicable to all entities on Feb. 17 and March 7 this year, respectively.

The DSA holds digital platform operators legally accountable for cracking down on disinformation, harmful content and illegal activities on their platforms. Specifically, the law requires companies to strengthen their content moderation and protection of users’ fundamental rights, ensure a high level of safety for minors, and improve their transparency and accountability.

The DSA takes a four-tier approach to accommodate the various sizes and functions of different online service providers. The most stringent obligations are reserved for very large online platforms and very large online search engines, which have the greatest influence.

Meanwhile, the DMA introduces rules for digital platforms designated as “gatekeepers” to prevent them from imposing unfair conditions on businesses and end users.

Gatekeepers are defined as providers of “core platform services” — including app stores, search engines and social media — that meet certain criteria based on factors such as annual turnover, average market capitalization and the number of active users.

The law prohibits gatekeepers from combining data sourced from different core services without consent, bans them from self-preferencing and guarantees the freedom of smaller competitors to conduct business on digital platforms.

In short, the DMA is an ex-ante regulation intended to encourage competition on digital platforms.

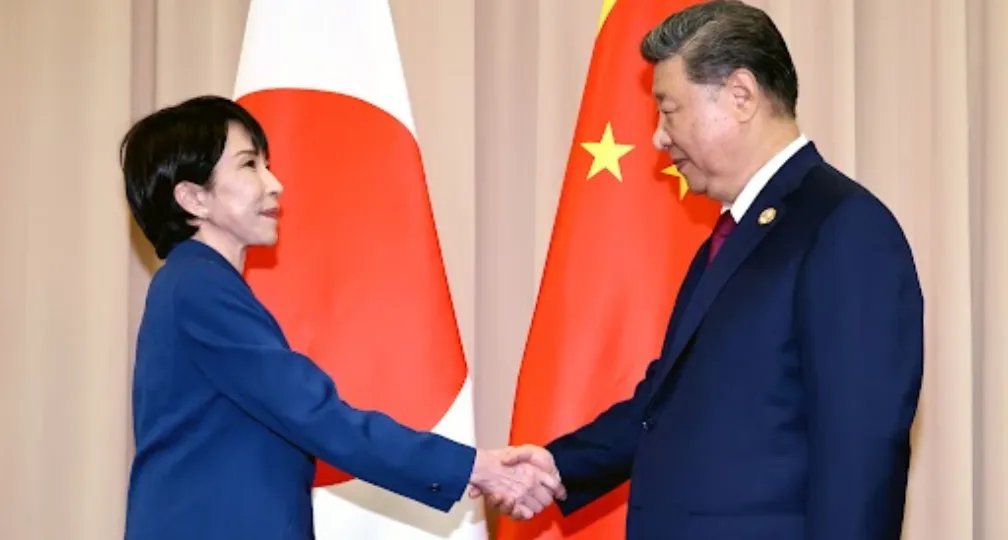

Beijing’s approach to regulating tech firms appears similar to that taken by Brussels but differs in nature.

The Chinese government adopts various ways to oversee and control tech companies. One of the most stringent tools is to acquire so-called “golden shares” or “special management shares,” which grant the government decisive voting rights or veto power over the business decisions specified in the articles of incorporation, even if the government holds stakes as small as 1%.

Such shares can be held only by the Chinese government. Aynne Kokas, an assistant professor of media studies at the University of Virginia, argues in her book “Trafficking Data” that Beijing is pushing “partial nationalization” of such private firms under the system.

It is believed that the Chinese government has obtained golden shares of Alibaba, ByteDance, DiDi, Tencent and their subsidiaries to tighten its grip over them.

Washington’s approach

However, we should not conclude that gaps in digital platform regulations are solely due to differences in political systems, nor should we assume that EU-type regulations represent the mainstream in democratic states. The situation varies on both sides of the Atlantic.

Washington’s approach is not to create new laws or revise existing ones, but to address the competition issues raised by digital platforms through the enforcement of existing laws.

U.S. President Joe Biden’s administration focused on dominant internet platforms in an executive order on promoting competition in the American economy issued in July 2021.

The U.S. Federal Trade Commission, led by Lina Khan, and the Justice Department’s Antitrust Division have filed lawsuits against Google, Meta, Amazon and Apple for potentially breaching antitrust laws.

The strengthening of law enforcement is strongly influenced by the school of thought referred to as the “New Brandeis” movement. This paradigm emphasizes citizen welfare and represents a marked departure from the traditional and mainstream interpretation of antitrust law focusing on consumer welfare.

Yet, the Biden administration’s law enforcement is limited to competition policies, and it has taken a laissez-faire approach to moderating online content.

Both Democrats and Republicans have proposed regulations for digital platforms regarding content moderation. However, no new rules have been enacted because they clash with rights such as freedom of expression.

What lies behind this is Section 230 of the Communications Decency Act and related rulings that protect digital platform providers from being held responsible for disinformation and harmful content posted on their sites.

This environment has supported the growth of U.S. Big Tech firms.

Voluntary efforts in Japan

Japan does not have comprehensive digital platform regulations like the EU, and the government stresses that tech companies have been making voluntary efforts.

In 2021, Japan enacted the Improving Transparency and Fairness of Digital Platforms Act to ensure transparency and fairness in three areas — online shopping malls, app stores and later digital advertising. The law stipulates that measures to enhance transparency and fairness “shall be based on voluntary and proactive efforts by digital platform providers … by keeping the involvement of the state and other regulations to the minimum necessary.”

The same is true for dealing with disinformation and harmful content.

In February 2020, a study group on digital platform services set up by the internal affairs ministry concluded that it is appropriate for the government to encourage the private sector to implement disinformation countermeasures based on voluntary efforts, while also considering freedom of expression.

Admittedly, some changes have been made to this policy.

The government is set to revise the Provider Liability Limitation Act to require social media platform operators of a certain size to respond more swiftly to requests by users to delete defamatory content and improve their transparency by disclosing guidelines for deleting posts.

However, these revisions do not mark a significant departure from the existing policy of relying on companies’ voluntary efforts. They are insufficient in terms of improving the transparency of U.S.-based digital platforms.

Making digital platforms work for democracy

Tokyo, Washington, Brussels and Beijing are taking different approaches to regulating Big Tech firms and digital platforms.

Which approach works best depends on what values are seen as important, including democracy, freedom and national security.

The following are some points for discussion.

First, maintaining an environment for fair competition on digital platforms and moderating content are somewhat related issues, but they differ in nature. While the former is agreed upon in many democracies, the latter causes great controversy.

Free and open societies and information environments are vulnerable to malicious information manipulation.

On the other hand, excessive government intervention in content moderation can make societies and information environments authoritarian.

The EU’s DSA offers one way to balance freedom and safety, but it is not a universal model.

Taiwan’s Digital Intermediary Services Act, a draft of which was released in June 2022, was a Taiwanese version of the DSA, essentially a copy and paste of the DSA’s outline and principles. It was withdrawn, however, indicating Taiwanese people’s concerns over the risk that digital platform regulations could pose to freedom.

Second, simply moderating content is not enough.

Needless to say, it is necessary to identify fake content, assess the degree of harm in posted videos and texts, and take appropriate action.

However, today’s information manipulation involves not only false information, but also malinformation — accurate but intentionally malicious information — and opinions for which fact-checking is not an option.

To address such information manipulation, platforms must take behavior-centric countermeasures, deleting suspicious accounts and restricting unauthorized activities based not solely on the content itself but also on the authenticity of those posting it and their methods of dissemination.

In other words, they need to respond to what Meta calls “coordinated inauthentic behavior.”

While fact-checking organizations and citizens can verify the accuracy of content, only digital platform providers can detect and respond in real time to anomalies, suspicious activities or inauthentic behavior.

Third, today’s malicious information manipulation, particularly foreign interference and influence operations, does not exploit only one digital platform.

Online propaganda networks spanning multiple digital platforms are extremely difficult for any single tech company to cope with on its own.

In other words, close coordination across digital platform operators on an operational level is indispensable to containing today’s cross-platform information manipulation.

Fourth, stringent regulations can be enforced during times of crisis and extraordinary events, such as wars, pandemics, major earthquakes or national elections.

As elections are being held in many democracies this year, the technological innovations brought about by large language models and generative artificial intelligence will once again underscore the importance and vulnerability of digital platforms.

At the same time, we will have the opportunity to accelerate debate over how democratic countries can make digital platforms work better for democracy.
