Will generative AI hold power in international relations?

In 2015, Chinese leader Xi Jinping launched Made in China 2025, an industrial policy aimed at making the country a manufacturing superpower by advancing military-civil fusion and upgrading industry as a whole.

The policy focuses on 10 sectors, including next-generation information technology, high-end numerical control machinery and robotics, aerospace and aviation equipment, and new materials.

In 2018, then-U.S. Vice President Mike Pence strongly criticized the Chinese Communist Party for using an arsenal of policies such as forced technology transfer and intellectual property theft to build Beijing’s manufacturing base at the expense of its competitors.

Ever since then, competition and friction have continued between the two superpowers over technologies such as semiconductors and artificial intelligence.

It is not clear whether science and technology are synonymous with power in the arena of international politics, but it is natural to think that states are competing with the intention of converting science and technology into power.

This article will look at generative AI, which has been attracting attention recently, and discuss how it can be converted to international political power.

ChatGPT

ChatGPT, released by U.S. firm OpenAI in November 2022, is an interactive automated response service based on generative AI. It gained 100 million users in two months.

While ChatGPT generates text, other generative AI services such as DALL·E 2, Midjourney and Stable Diffusion have emerged to let users generate images by entering text.

ChatGPT users can give instructions through textual inputs called “prompts” to produce, summarize and translate texts or generate programs.

Generative AI is a type of artificial intelligence system that is highly capable of converting one form of content into another, for example turning natural-language instructions into programs.

Technologically, ChatGPT is built by combining a large language model (LLM) with reinforcement learning from human feedback (RLHF), a machine-learning approach that helps steer it away from generating unethical content.

What enables ChatGPT to generate high-quality texts is its architecture, called Transformer.

Transformer is made up of an attention mechanism (an approach presented in “Attention Is All You Need,” a paper written by Google’s Ashish Vaswani and his team) and a multilayer perceptron, a type of neural network.

An attention mechanism lets the model focus its “attention” on the most relevant parts of the input sequence, while a multilayer perceptron draws on what it has learned from a vast amount of training data, much like long-term memory in humans, to produce outputs relevant to the text being processed.
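To make the idea of “attention” concrete, here is a minimal sketch of scaled dot-product attention, the operation described in the Vaswani paper, written in plain Python. The vectors are made-up toy values and the function names are illustrative only. It shows the general technique, not ChatGPT’s actual implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query to each input position, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy input (assumed values): three positions with 2-dimensional vectors.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

weights, output = attention(query, keys, values)
```

In this toy case the query resembles the first and third keys far more than the second, so those two positions receive almost all of the weight and dominate the output.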

Together, the attention mechanism and the multilayer perceptron generate text by repeatedly predicting the next word in a sequence, one after another.
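The next-word loop can be sketched in a few lines of Python. The probability table below is hypothetical and stands in for a trained model. Unlike a real LLM, which conditions on the whole sequence, this toy looks only at the last word and always picks the most probable continuation.

```python
# Hypothetical next-word probabilities, standing in for a trained model.
NEXT_WORD_PROBS = {
    "<start>": {"generative": 0.6, "the": 0.4},
    "generative": {"ai": 0.9, "models": 0.1},
    "the": {"ai": 1.0},
    "ai": {"writes": 0.7, "<end>": 0.3},
    "models": {"<end>": 1.0},
    "writes": {"text": 0.8, "code": 0.2},
    "text": {"<end>": 1.0},
    "code": {"<end>": 1.0},
}

def predict_next(words):
    """Return the most probable next word given the sequence so far."""
    candidates = NEXT_WORD_PROBS[words[-1]]
    return max(candidates, key=candidates.get)

def generate(max_words=10):
    """Build a sentence one word at a time until <end> or the length limit."""
    words = ["<start>"]
    for _ in range(max_words):
        next_word = predict_next(words)
        if next_word == "<end>":
            break
        words.append(next_word)  # the new word becomes part of the next input
    return words[1:]  # drop the <start> marker

sentence = generate()
```

Each predicted word is appended to the sequence and fed back in as input for the next prediction, which is what “one after another” means in practice.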

Information warfare and generative AI

A model such as ChatGPT can acquire a certain ideological bias while it is being built, through the selection of input data and reinforcement learning from human feedback.

In other words, the same process now used to eliminate unethical content can be used to build in an intended ideological bias.

For instance, an LLM created by an authoritarian state is likely to ideologically match its regime.

And since it can interact in a humanlike way, it can be used to make people believe fake information.

Such a characteristic of ChatGPT implies that it can be applied to cognitive warfare, in which states spread information that is favorable to themselves.

In April, the People’s Liberation Army Daily, the official newspaper of the Chinese army, featured a series on how ChatGPT can be used for military purposes.

The series explains that ChatGPT can be used to analyze public opinion, confuse the public with fake information and control people in targeted countries.

Generative AI like ChatGPT is expected to be used to generate disinformation in information warfare, including in the cognitive domain, and could affect future information warfare between countries.

One example of disinformation being spread in an organized manner in the past is the case of Russia’s Internet Research Agency, a company that used to be owned by Yevgeny Prigozhin, who headed Russian mercenary company the Wagner Group.

The Internet Research Agency actively intervened in the 2016 U.S. presidential election through social media.

Its content reached an estimated 126 million Facebook users, spreading a variety of disinformation such as fake comments attributed to presidential candidate Hillary Clinton.

From now on, generative AI is likely to take over the task of creating disinformation from humans and will engage in the work 24/7.

Generative AI will make it possible to set up fake accounts that post images and everyday remarks just as humans do. It will be difficult to determine whether such accounts are fake.

There have already been concerns over the spread of disinformation through deepfake technology, which generates images and videos indistinguishable from real ones.

In May, a fake image purporting to show an explosion near the Pentagon was widely shared on social media, causing the stock market to dip temporarily.

If disinformation generated by state or nonstate actors can intervene in elections and the stock market, can we say that generative AI, which is a tool for such actions, constitutes power?

While the technology itself is neutral, if states can use generative AI to control other countries’ public opinion and make an environment favorable to them, it can be described as an aspect of so-called sharp power.

It could be a threat to democratic states’ election systems.

In the Russia-Ukraine war, both sides have put out a wide range of information, and it has been difficult to distinguish what is true from what is false.

On the internet, quantity counts. The information that reaches the top spots of search results or that is posted frequently on social media becomes influential. And generative AI can easily create and spread information in high quantities.

Other applications

Generative AI can be applied not only to information warfare but also to other areas such as robotics, decision support systems and educational training.

Technology to generate videos can be applied to robot motion planning. If it is possible to create videos by typing in sentences, then the videos can be used to navigate robots.

Google, for instance, developed a technology called PaLM-SayCan, which lets users give ambiguous natural language instructions to drive robots to carry out actions.

Google has also carried out robot motion planning with UniPi, which, instead of the LLM used in PaLM-SayCan, relies on a video-generating model trained on massive amounts of video data.

Because an LLM can structure massive amounts of data, it can provide users with enhanced decision-making, and its ability to interact makes it suitable for training humans.

In the military domain, which is directly linked to national power, generative AI can be used to control drones and support operational planning and training.

Because generative AI can draw on far more comprehensive data than people can, operational planning could undergo qualitative changes that humans would not have come up with on their own.

Generative AI can be applied widely, not only in cognitive domains such as generation of disinformation. We can call it an example of science and technology being converted to power.

In August, the U.S. Defense Department announced the establishment of a generative AI task force, recognizing the potential of generative AI to significantly improve intelligence, operational planning and administrative and business processes.

Japan’s strategy

Based on an overview of how generative AI can be applied to information warfare and other domains, we can see the risk of generative AI — if no ethical restrictions are put in place — limitlessly generating disinformation and computer viruses, contaminating the internet on a large scale.

Since generative AI is an all-purpose technology that replaces a part of human thinking, it can be applied to a broad range of areas.

Countries will work to gain a competitive advantage by utilizing generative AI and attempting to monopolize it.

To secure political stability, they will consider developing domestically made LLMs or setting regulations so that other countries cannot intervene in their affairs, culturally or ideologically.

In June, the European Parliament approved its negotiating position on the European Union Artificial Intelligence Act, which is aimed at significantly bolstering regulations on the development and use of AI.

And in July, it was reported that the U.S. Federal Trade Commission sent to OpenAI a demand for records about how it addresses risks related to its AI models.

The U.S. government announced in July that it had reached a deal with seven leading companies in AI — Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI — to develop tools to alert the public when an image, video or text is created by AI.

In August, China put into force a set of interim administrative measures for the management of generative AI services, and is expected to require providers of these services to undergo security assessments and conduct algorithm filing before serving the public.

On the other hand, in May, Japan’s AI strategy council said in its tentative points for discussion that issues related to national security will be subject to discussions by designated divisions based on the need to manage information.

As AI technology can be regarded as power — considering it is used to form public opinion and also impacts robotics — the strategy Japan must take is to develop its own generative AI.

To develop an LLM, it is necessary to nurture engineers and prepare large-scale computational resources and data.

Computational resources no doubt mean high-performance graphics processing units (GPUs), and the government can offer assistance in securing these.

On the other hand, it is desirable that Japanese companies and university researchers compete to develop the generative AI model itself.

Countries have only just started developing generative AI, and taking the lead in the development and regulation of the technology will become a source of future power.

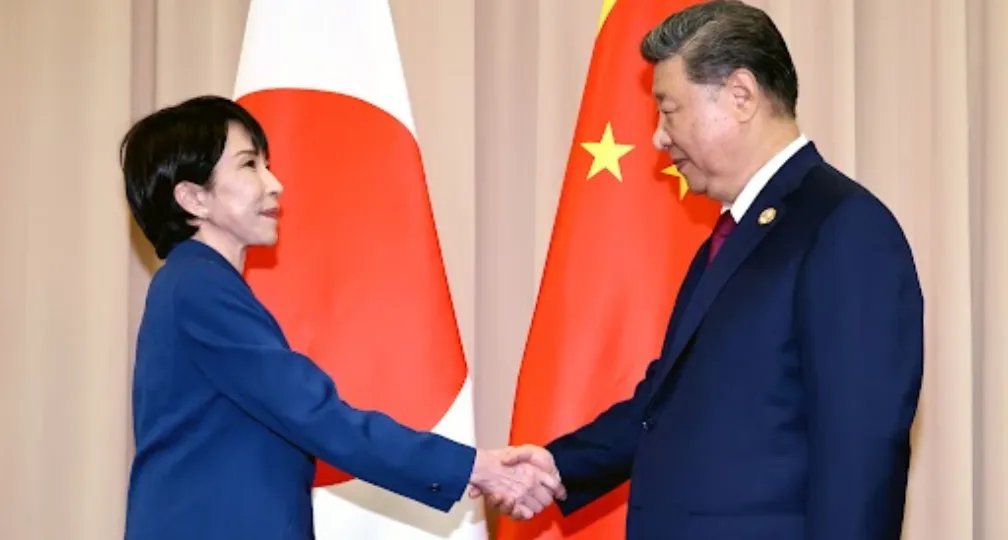

At a meeting of the AI strategy council, Sanae Takaichi, minister in charge of science and technology policy, said discussions should take place on the assumption that once a technology is released, it will continue to be used.

The full picture of generative AI’s possibilities is not yet clear, and that is why countries are exploring the potential of converting it to power.

Japan must not drop out of the competition now.

(Photo Credit: AFP / Aflo)

Geoeconomic Briefing

Geoeconomic Briefing is a series featuring researchers at the IOG focused on Japan’s challenges in that field. It also provides analyses of the state of the world and trade risks, as well as technological and industrial structures (Editor-in-chief: Dr. Kazuto Suzuki, Director, Institute of Geoeconomics (IOG); Professor, The University of Tokyo).

Disclaimer: The opinions expressed in Geoeconomic Briefing do not necessarily reflect those of the International House of Japan, Asia Pacific Initiative (API), the Institute of Geoeconomics (IOG) or any other organizations to which the author belongs.

Group Head, Emerging Technologies,

Director of Management

Makoto Shiono holds a B.A. in Political Science from the Faculty of Law at Keio University and a Master of Laws (LLM) from Washington University (St. Louis) School of Law. He served as a member of the planning committee of the Intellectual Property Strategy Headquarters, Cabinet Office; the Working Group on Key and Strategic Areas, National Standards Strategy Subcommittee, Cabinet Office; and the Working Group of the Green Innovation Project Subcommittee of the Industrial Structure Council. He also participated in drafting the Ethics Guidelines (2017) as a member of the Ethics Committee of the Japanese Society for Artificial Intelligence. [Concurrent Positions] Co-Managing Director & CLO, IGPI Group; Director & Managing Director, Industrial Growth Platform, Inc. (IGPI); Member, Startup Investment Committee, Japan Bank for International Cooperation (JBIC); Executive Officer, JBIC IG Partners
